We live in an age of instant gratification and a continual demand for quick information that’s accessible 24/7/365.

Speed is the name of the game. Consider a website’s page load time, for example. If your mobile website takes more than three seconds to load, an estimated 53% of users will abandon your site.

When it comes to connectivity, speed is just as important. Whether you are using a fiber-optic, copper, or wireless network, latency can have a serious impact on your business, your employees, and your customers.

Though our industry often talks about the need for greater bandwidth, it’s just as important not to overlook latency in your network.

Here’s a brief examination of why it’s important to improve your network latency – and a few ways you can improve it.

…But first, what is latency?

We briefly discussed latency in our recent article about the benefits of fiber-optic networks, but to understand why improving your network latency matters, we need to look at it in a little more depth.

The simplest way to define latency is as a delay. Specifically, latency is the time it takes for a packet of data sent across a network to reach its destination. It is measured in milliseconds and is often reported as a round-trip time (RTT): the time for data to reach its destination plus the time for a reply to come back.

When it takes a long time for packets to reach their destination across a network, you have a high-latency connection. When it takes only a short time, you have a low-latency connection.

Latency rarely has a single cause. It usually results from a combination of factors: your hardware, your Internet connection, where a remote server is located, and more.
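If you’d like to see latency for yourself, one rough approach is to time how long a TCP connection takes to open. The connection handshake needs about one round trip (data out to the server and a reply back), so the connect time approximates round-trip latency. This is a minimal sketch assuming Python 3.9+ and only the standard library; the function name is our own, and the measured time also includes some local processing, so treat the numbers as estimates rather than precise RTTs.

```python
import socket
import time


def tcp_connect_times_ms(host: str, port: int, samples: int = 5,
                         timeout: float = 2.0) -> list[float]:
    """Time several TCP handshakes to host:port; each value approximates one RTT in ms."""
    # Resolve the name once, up front, so DNS lookup time is not counted as latency.
    family, socktype, proto, _, sockaddr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    results = []
    for _ in range(samples):
        with socket.socket(family, socktype, proto) as s:
            s.settimeout(timeout)  # raises an OSError subclass if the server is too slow
            start = time.perf_counter()
            s.connect(sockaddr)    # returns once the SYN / SYN-ACK exchange completes
            results.append((time.perf_counter() - start) * 1000.0)
    return results
```

For example, `statistics.median(tcp_connect_times_ms("example.com", 443))` gives a typical connect time to a web server; `example.com` and port 443 are placeholders for whichever server you care about. Taking the median of several samples smooths out one-off spikes.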

Real-world examples of latency

Have any of these things ever happened to you?

- You sent an email to your colleague…and it took longer than expected to reach their inbox.

- You were running a remote meeting and trying to share your screen, and it took a long time for the screen to load.

- You were running a remote meeting, and what you said wasn’t received in real time by the other attendees.

- You were on a conference call with one or more attendees using an IP Phone or IP Voice Termination where there was a significant delay, causing individuals to talk over one another.

- You were remotely accessing your company’s MRP/ERP system, and you experienced a session timeout or significant delays accessing data.

- You were running a remote backup and experienced a session timeout, or it took far too long to complete.

- It seemed to take forever to download a file someone emailed to you.

- You clicked on a website and had to wait for it to load completely.

- You clicked on a website, and though it started loading, not everything appeared at once. Instead, the images loaded one by one.

That was latency.

The negative effects of latency – and why it’s important to improve your network latency

In addition to the real-world examples above, latency can have several negative effects on your business.

Let’s delve into these a bit so we can explore why it’s important to improve a network’s latency.

1) Latency reduces your network’s throughput (effective bandwidth)

If your network has high latency, your throughput (the amount of data you actually move per second, sometimes loosely called your effective bandwidth) will suffer as a consequence. Bandwidth itself is defined as “the volume of information per unit of time that a transmission medium (like an internet connection) can handle.”

With a lot of latency in your network, you won’t be able to send as much data in the same amount of time, even though the link’s rated bandwidth hasn’t changed. Latency won’t always affect your throughput, and the effect may last only a few seconds, but it can develop into a persistent problem.

As Boris Rogier explains in a Performance Vision article: “When latency is high, it means that the sender spends more time idle (not sending any new packets), which reduces how fast throughput grows.”
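The quote points to a well-known limit: a single TCP connection can only have one window’s worth of unacknowledged data in flight per round trip, so its throughput cannot exceed window size divided by RTT, no matter how fast the link is. The sketch below assumes a fixed window and ignores packet loss and slow start; the 64 KiB window is an illustrative value, not a measurement.

```python
def max_tcp_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP flow's throughput (window / RTT), in megabits per second."""
    if rtt_ms <= 0:
        raise ValueError("RTT must be positive")
    bits_per_window = window_bytes * 8
    rtt_seconds = rtt_ms / 1000.0
    return bits_per_window / rtt_seconds / 1_000_000


# Same illustrative 64 KiB window, increasing round-trip latency.
for rtt in (10, 50, 100):
    print(f"RTT {rtt:>3} ms -> at most {max_tcp_throughput_mbps(65_536, rtt):.1f} Mbps")
```

With these numbers the bound is about 52 Mbps at 10 ms but only about 5 Mbps at 100 ms, even on a gigabit link. Modern TCP stacks use window scaling to allow much larger windows, which raises the ceiling but does not remove its dependence on RTT.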

2) Latency is a problem for any product or service involved in the Internet of Things (IoT)

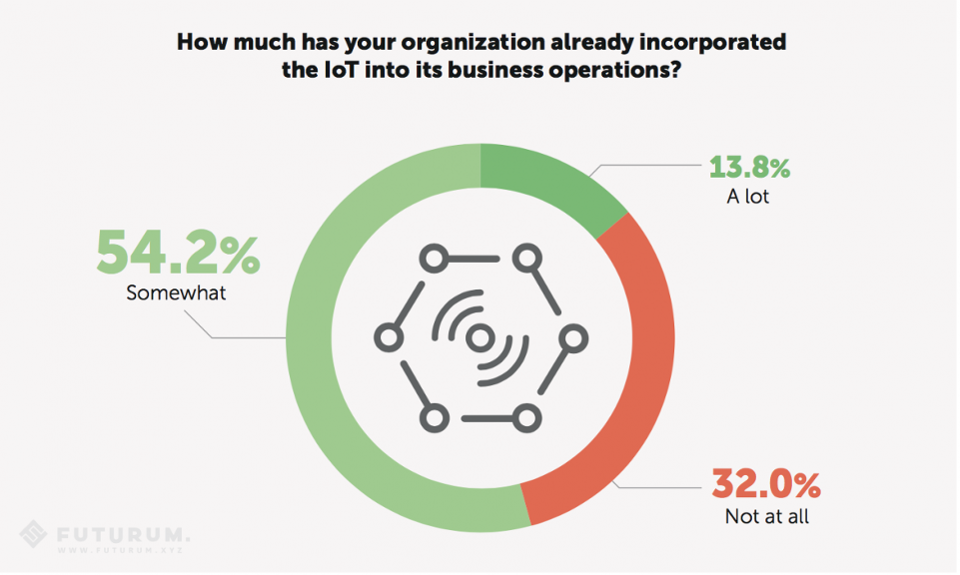

IoT is more than just a buzzword – it’s affecting more and more businesses and vastly changing the way we view, receive, access, and use data. In a 2017 business study, for instance, two-thirds of companies reported that they were using the IoT in their operations.

The IoT is predicted to continue to grow this year – especially in industries like healthcare, retail, and supply chain.

The problem with the IoT and latency is that high latency can undermine the effectiveness of the devices – or, well, the “things” in the Internet of Things.

And this can have serious consequences.

Lori MacVittie writes in an article for Network Computing (UBM):

“Many IoT sensors can’t tolerate latency issues associated with cloud, created new challenges for networking…. Now, my Fitbit doesn’t make decisions for me, unless it’s to be an annoying reminder that I’m not walking enough, but there are plenty of cases where things do make decisions. And some are increasingly life-and-death decisions, such as shutting down part of an assembly line when one of the components is about to overheat and possibly malfunction, or worse, explode. A variety of systems are increasingly making decisions based on data collected from the Internet of Things, whether industrial or consumer, and some of them rely on nearly instantaneous feedback. Delays could realistically mean the difference between life and death.”

The things of the IoT (sensors, gadgets, controls, and so on) depend on a responsive system or network to work effectively. High latency means slower responses, and slower responses can keep these devices from working at full capacity, or even from working as they need to.

As the IoT grows, we can expect the need for lower latency to grow, too.

3) Latency creates problems for enterprise collaboration applications

Many companies have switched to cloud-based tools like Workbase, Asana, Skype, Slack, Evernote, Dropbox, SharePoint, and Microsoft Office 365 to share and store data, work on projects, and facilitate communication internally and externally.

These enterprise collaboration applications have many benefits, but if your network connection is plagued by high latency, you may experience lags or delays in your applications.

4) Latency affects your business’s productivity (and, by extension, net profit)

So, let’s say you are one of the many businesses using an enterprise collaboration application, or any other cloud-based software.

What might happen if your network connection experiences high latency?

- The enterprise collaboration application may stop working

- A meeting might be halted

- Employees will have to wait for things to load or download

- Your IT team will have to keep fixing problems with your systems and won’t be able to focus on other duties

- If you are hosting a webinar, remote demo, or meeting over an application, it may not work at all, or it might drag on because of slow loading times

In other words, your business’s productivity could go down – all because of latency.

This, in turn, could affect your net profit. As Sharon Bell (CDNetworks) writes in a HighQ blog post:

“Ultimately, enterprise collaboration apps may improve productivity, but their latency issues could also account for unpredicted expenses to maintain stability and usefulness, which could slow down the growth of your bottom line.”

In summary: the importance of improving your network’s latency

As you’ve seen, latency can have several adverse effects on your business.

And the ones highlighted above are only a few of them.

Thus, if you want more throughput from your connection…

…and if you are taking part in the IoT (or thinking about it) and need your devices to run effectively…

…and if you regularly use enterprise collaboration applications…

…or any cloud-based tool, really…

…and if latency is affecting your business’s productivity…

It’s vitally important to improve your network’s latency.

6 Ways to Improve Your Network’s Latency

Good news! There are a few things you can do to reduce the amount of latency in your network:

1) Ensure your network hardware and software can process packets quickly

Processing delay is the part of latency caused by the time your hardware or software takes to process each packet.

To prevent processing delays, you should ensure that your network hardware and software are capable of processing packets quickly.

Some steps you can take to do this include:

- Keeping your routers and switches updated

- Checking the configuration of your routers and switches

- Reviewing your access control lists

- Making sure any software your business uses is continually updated

- Keeping your servers up to date

Note: these steps should be completed on a case-by-case basis and may not apply to your situation. The best approach is to evaluate your system and determine which steps are most applicable.

2) Send less data over your network and/or increase your bandwidth

To reduce serialization delay (also known as transmission delay), the time it takes to put a packet’s bits onto a network link, there are two main things you can do:

- Send less data. For example, you might use caching mechanisms or change your network applications.

- Increase the bandwidth of your network. More bandwidth means less serialization delay.
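Serialization delay is simply a packet’s size in bits divided by the link’s bit rate, which is why both remedies help: sending less data means fewer bits to clock out, and more bandwidth means each bit takes less time. A minimal sketch, assuming a 1,500-byte packet (a common Ethernet MTU) as an illustrative size:

```python
def serialization_delay_ms(packet_bytes: int, link_bps: float) -> float:
    """Time to clock one packet's bits onto a link, in milliseconds."""
    return packet_bytes * 8 / link_bps * 1000.0


# One illustrative 1,500-byte packet on links of increasing speed.
for label, bps in (("10 Mbps", 10e6), ("100 Mbps", 100e6), ("1 Gbps", 1e9)):
    print(f"{label:>8}: {serialization_delay_ms(1500, bps):.3f} ms")
```

The same packet takes 1.2 ms to serialize at 10 Mbps but only 0.012 ms at 1 Gbps. Because this delay is paid again at every link along the path, it adds up on slow hops.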

3) Consider using an Ethernet-over-Fiber connection

As we explained in a recent article, fiber-optic networks have less latency than their copper counterparts.

By using a fiber-optic network, you’ll be less likely to experience delays, and thus, any software, hardware, or system you use on your network won’t be as negatively affected.

4) Consider using a private line network for your enterprise WAN

When an enterprise has multiple sites that can be served through Ethernet-over-Fiber, consider establishing direct connectivity (VLANs) between the sites.

When you subscribe to direct connectivity between sites from one or more providers, they will generally guarantee a maximum one-way or round-trip delay.

This type of guarantee can’t be provided when traffic travels between sites over the public Internet, because there is no way to control the path your traffic takes or the number of Internet routers it passes through (the number of hops).
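If your provider does guarantee a maximum delay, it is worth checking your own measurements against it. The sketch below is hypothetical: `check_delay_sla` and `SlaReport` are names of our own, the 20 ms limit and sample values are made up for illustration, and real contracts define their own measurement method (averages, percentiles, measurement intervals), so check your agreement’s terms.

```python
from dataclasses import dataclass


@dataclass
class SlaReport:
    within_limit: float  # fraction of samples at or under the guaranteed maximum
    worst_ms: float      # highest delay observed
    violations: int      # number of samples over the limit


def check_delay_sla(samples_ms: list[float], max_delay_ms: float) -> SlaReport:
    """Compare measured delays against a guaranteed maximum (hypothetical helper)."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    over = [s for s in samples_ms if s > max_delay_ms]
    return SlaReport(
        within_limit=1 - len(over) / len(samples_ms),
        worst_ms=max(samples_ms),
        violations=len(over),
    )


# Illustrative round-trip samples (ms) checked against an illustrative 20 ms guarantee.
print(check_delay_sla([11.8, 12.1, 12.4, 27.9, 12.0], max_delay_ms=20.0))
```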

If you’re using Amazon AWS, Microsoft Azure, Google Cloud, or another major cloud service provider for your enterprise applications, consider a private cloud connection.

If a service provider has a PoP at one or more major metropolitan datacenters, they can most likely provide you with a direct connection, or even diverse-protected connections, to your cloud provider’s network.

5) Consider using a provider with direct connectivity to content providers or content delivery networks (CDNs)

Streaming video is sensitive to bandwidth and latency. It can be the killer application for many content providers and Internet service providers (ISPs).

Content providers and ISPs want to improve the quality of experience for their subscribers when it can be done in a cost-effective manner.

Content providers place servers closer to the network edge, inside ISPs that meet a minimum threshold of sustained traffic. Consider using an ISP that meets these thresholds and has on-net servers from Netflix, Google, Facebook, and others.

There are also CDNs at major metropolitan datacenters where content providers and ISPs can exchange traffic directly with one another. In this situation, it’s worth using an ISP that maintains one or more CDNs to improve the speed and quality of the services you receive over the Internet.

The Internet was originally built as a web of connections between multinational carriers (Tier 1s), with smaller carriers purchasing services to connect to these Tier 1s. As the Internet has grown, so have the number of providers and the amount of bandwidth on each provider’s network.

When ISPs connect directly with one another or peer through an Internet exchange (IX), there is a greater chance that your traffic will pass through fewer hops and experience lower latency on its way from point A to point Z.

Lastly, consider using an ISP that not only purchases transit Internet Access Service from a multinational Tier 1 carrier but also connects with regional and local ISPs, either directly or through an IX.
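To see why fewer hops tends to help, it can be useful to split one-way delay into propagation time (route distance divided by the signal speed in fiber, roughly 200,000 km per second) plus a per-hop cost for each router’s processing and queuing. This is a toy model: the function name, the 0.5 ms per-hop figure, and the route lengths are hypothetical round numbers chosen only to illustrate the trade-off, and real per-hop delays vary widely with load.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approx. two-thirds of the speed of light


def one_way_delay_ms(route_km: float, hops: int, per_hop_ms: float) -> float:
    """Toy estimate: propagation over the route plus a fixed cost per router hop."""
    propagation_ms = route_km / SPEED_IN_FIBER_KM_PER_S * 1000.0
    return propagation_ms + hops * per_hop_ms


# Hypothetical comparison: a transit path that detours through a distant exchange
# versus a direct peering path between the same two cities.
transit = one_way_delay_ms(route_km=1_800, hops=14, per_hop_ms=0.5)
peered = one_way_delay_ms(route_km=600, hops=5, per_hop_ms=0.5)
print(f"transit: {transit:.1f} ms, peered: {peered:.1f} ms")
```

In this made-up example the peered path comes out at 5.5 ms versus 16.0 ms for the transit path, because it is both shorter and crosses fewer routers.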

6) Evaluate how your provider guards against Distributed Denial of Service (DDoS) attacks

Interactive gaming is big on the Internet, and today, some gamers order up DDoS attacks to slow their opponents down.

DDoS attacks slow down other gamers by flooding an ISP’s connection with excessive traffic. Unfortunately, if the ISP isn’t properly protected against such attacks, the flood can affect all the users of one or more ISPs.

As such, one way to reduce latency in your network is to evaluate how your provider guards against DDoS attacks. Consider using an ISP that operates and maintains a real-time DDoS detection and mitigation system, such as one provided by a company like Corero, and/or one that buys such protection from a Tier 1 or Tier 2 transit IP provider such as Level3.
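Commercial mitigation systems are far more sophisticated, but the basic idea behind real-time detection can be illustrated with a simple rate check: keep a smoothed baseline of normal traffic and flag intervals that jump far above it. This is a toy sketch, not how any vendor’s product actually works; the function name, the smoothing factor `alpha`, the 5× threshold, and the traffic readings are all hypothetical.

```python
def flag_traffic_spikes(pps_per_interval: list[float], alpha: float = 0.2,
                        threshold: float = 5.0) -> list[int]:
    """Return indices of intervals whose packet rate exceeds threshold x the EWMA baseline."""
    if not pps_per_interval:
        return []
    baseline = pps_per_interval[0]
    flagged = []
    for i, rate in enumerate(pps_per_interval[1:], start=1):
        if rate > threshold * baseline:
            flagged.append(i)  # suspected flood: keep it out of the baseline
        else:
            # Exponentially weighted moving average of "normal" traffic.
            baseline = alpha * rate + (1 - alpha) * baseline
    return flagged


# Hypothetical packets-per-second readings; intervals 4 and 5 simulate a flood.
readings = [1_000, 1_100, 950, 1_050, 48_000, 52_000, 1_000]
print(flag_traffic_spikes(readings))
```

Excluding flagged intervals from the baseline is a deliberate choice here: otherwise a sustained flood would gradually raise the baseline until it no longer looked abnormal.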